From Smart Vision to Predictive Maintenance

This post discusses AI and ML solutions for industrial monitoring and control.

Evolution of Computer Vision in Automation

The use of computer vision in manufacturing, process control and other automation applications is considered by many a relatively new technology, but it actually dates back to research conducted by MIT in the 1960s into 3D machine vision. Later, in the 1970s, MIT’s Artificial Intelligence (AI) Lab opened a Machine Vision course, which covered subjects such as edge detection and segmentation.

It was also in the 1970s that the term “smart camera” was first coined, describing a self-contained vision system built around an image sensor such as a charge-coupled device (CCD) or a CMOS-based active-pixel sensor. Moreover, application-specific smart cameras could sense frequencies outside the range of human vision; for example, they could be (and are) used for thermal imaging.

Once digitized, images from smart cameras can be interpreted by computers or programmable logic controllers (PLCs). This was an automation game changer in the 1980s and contributed greatly to industrial machine-to-machine (M2M) technology, which took off in the 1990s but has its roots in the telecommunications industry of the 1970s. Today, M2M uses public wired and wireless networks (Ethernet and cellular, for example) to share data between machines without human intervention.

Most recently, in the mid-2010s, the fourth industrial revolution (a.k.a. Industry 4.0; see Figure 1) began, taking automation to a new level. Industry 4.0 also encompasses digital twins and augmented reality, as well as automation within the supply chain, such as ordering parts and materials and arranging transportation.


Figure 1 – The above illustrates the four industrial revolutions and the technologies they employed.

Industry 4.0 builds on M2M, smart cameras and other advanced sensing technologies; is heavily underpinned by cyber-physical systems (CPS), big data and cloud computing; and is also strongly associated with the Industrial Internet of Things (IIoT). Moreover, AI and machine learning (ML—a branch of AI) are increasingly contributing to and enhancing Industry 4.0.

Industry 4.0 to 5.0

Smart vision systems are playing crucial roles in high-volume manufacturing and process monitoring/control applications. For example, vision-equipped robots can perform tasks like welding and laser cutting while monitoring the quality of the welds/cuts as they go. Smart vision has also made the so-called collaborative robot (cobot) possible.

Where traditional manufacturing robots are concerned, their size (which will typically include safety fencing) and the need for detailed programming for specific tasks can limit their use in some industries. Cobots, on the other hand, do not require safety fencing and they detect the presence of humans through the use of smart cameras and force and proximity sensors. They can also react to specific human motions and voice commands, and, thanks to ML, they are machines that can learn.

Cobots therefore have uses working alongside humans in factories (assembling products, for example) and in warehouses and distribution centers (picking and packaging). Also, while it is hard to imagine anything beyond Industry 4.0 (it is extremely encompassing), cobots are regarded as integral to the evolution to Industry 5.0, an evolution that is already underway.

The European Union says Industry 5.0 “…provides a vision of industry that aims beyond efficiency and productivity as the sole goals, and reinforces the role and the contribution of industry to society.” Also: “It places the well-being of the worker at the center of the production process and uses new technologies to provide prosperity beyond jobs and growth while respecting the production limits of the planet.”

Predictive Maintenance

The inclusion of AI and ML in Industry 4.0 (and the emerging Industry 5.0) is helping companies embrace the benefits of reduced downtime and higher throughput that come from predictive maintenance. For example, most industrial robotic equipment experiences high levels of vibration, which can degrade components and systems over time. Factory operations personnel who want to better understand the impact of this vibration can use AI/ML analytics to interpret the data, identify developing problems and address them in a timely, cost-effective manner before a system fails.

Specifically, a predictive maintenance strategy (one that monitors vibration levels, temperature, current draw and any other parameter that might indicate wearing or failing parts) allows manufacturers to move away from the traditional approach of scheduling maintenance at regular intervals, which often sees parts replaced while they still have plenty of life left in them. That approach errs on the side of caution but is neither particularly cost-effective nor environmentally friendly, and it does not fully protect against unexpected failures.
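To contrast condition-based scheduling with fixed intervals, here is a minimal sketch, assuming a vibration RMS level that rises roughly linearly as a part wears and a hypothetical alarm threshold. It fits a least-squares line to the readings logged so far and extrapolates to estimate how many operating hours remain before the threshold is reached, so a replacement can be planned from the part's measured condition rather than the calendar. The function name, sample data and threshold are illustrative; real wear curves are often nonlinear, in which case a linear fit over a recent window is only a rough guide.

```python
from typing import Optional, Sequence


def hours_until_threshold(
    hours: Sequence[float],
    vibration_rms: Sequence[float],
    threshold: float,
) -> Optional[float]:
    """Estimate operating hours left before vibration RMS reaches `threshold`.

    Fits vibration = slope * hour + intercept by ordinary least squares and
    extrapolates the line forward from the latest sample. Returns None if the
    trend is flat or falling (no predicted crossing), and 0.0 if the fitted
    line has already crossed the threshold.
    """
    n = len(hours)
    if n != len(vibration_rms) or n < 2:
        raise ValueError("need at least two paired samples")
    mean_t = sum(hours) / n
    mean_v = sum(vibration_rms) / n
    sxx = sum((t - mean_t) ** 2 for t in hours)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(hours, vibration_rms))
    if sxx == 0:
        raise ValueError("timestamps must not all be equal")
    slope = sxy / sxx
    if slope <= 0:
        return None  # not wearing (on this evidence), so no crossing predicted
    intercept = mean_v - slope * mean_t
    crossing_hour = (threshold - intercept) / slope
    return max(0.0, crossing_hour - hours[-1])
```

For readings of 1.0, 1.2, 1.4, 1.6 and 1.8 mm/s taken at 0, 100, 200, 300 and 400 hours and a threshold of 2.8 mm/s, the function returns approximately 500 hours; a flat or falling trend returns `None`.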

As robotic systems in modern manufacturing become more complex, factory managers need to be aware of their maintenance requirements in real time and be on top of both routine and critical service scheduling in order to avoid an interruption in service and to get more use out of parts. Furthermore, the constant monitoring of key system parameters means that, in the event of a rapidly manifesting fault condition, equipment alarms can be raised or, if need be, machinery (where the fault is as well as downstream equipment) can be automatically shut down to prevent damage.
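The alarm-or-shutdown behavior described above can be sketched as follows. This is a minimal illustration, assuming each monitored parameter has a hypothetical warning limit, trip limit and maximum rate of rise, and that the production-line topology is known as a map from each machine to the machines it feeds. The names `Limits`, `evaluate` and `machines_to_stop`, and all numbers in the example, are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Limits:
    warn: float      # raise an alarm at or above this value
    trip: float      # shut down at or above this value
    max_rate: float  # shut down if the value rises at least this fast (units per second)


def evaluate(prev: float, curr: float, dt_s: float, lim: Limits) -> str:
    """Classify one reading as 'ok', 'alarm' or 'trip'."""
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    rate = (curr - prev) / dt_s
    # A rapidly manifesting fault trips on rate of rise before the absolute limit.
    if curr >= lim.trip or rate >= lim.max_rate:
        return "trip"
    if curr >= lim.warn:
        return "alarm"
    return "ok"


def machines_to_stop(faulty: str, downstream: Dict[str, List[str]]) -> Set[str]:
    """Return the faulty machine plus everything it feeds, found breadth-first."""
    stop = {faulty}
    queue = deque([faulty])
    while queue:
        for nxt in downstream.get(queue.popleft(), []):
            if nxt not in stop:
                stop.add(nxt)
                queue.append(nxt)
    return stop
```

For example, on a line where a press feeds a welder and the welder feeds an inspection station and a packer, a trip on the welder stops the welder, inspection station and packer but leaves the upstream press running. The rate-of-rise check is what catches a fast-developing fault before the absolute trip limit is reached.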

Over the years, manufacturing and service personnel have become accustomed to the advances offered by computerized maintenance management systems (CMMS) and reliability-centered maintenance (RCM) approaches that are fed by operational data. The move to AI/ML-based predictive maintenance models, fed by a wealth of real-time data, makes fuller use of parts that wear (and of other consumables, such as oils), so it is more cost-effective and provides better protection against unexpected failures.

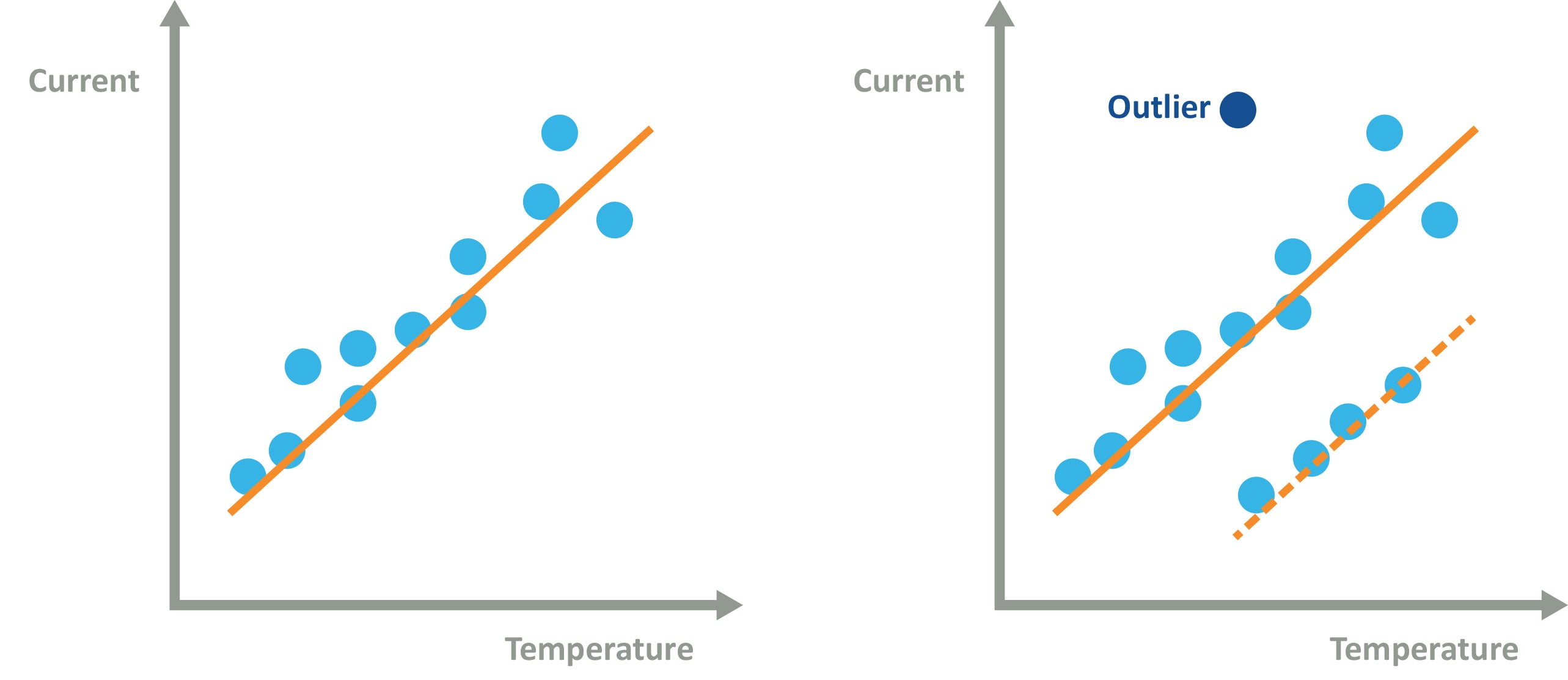

In addition, because AI and ML can infer things—things they have not necessarily been taught—they are great at anomaly detection and spotting outliers. For instance, consider the monitoring of a motor’s temperature and current draw. Both parameters being monitored would have limits, above which an alarm would be raised. Current (draw) and temperature would be expected to change together depending on the mechanical load on the motor, i.e. plotting current against temperature on a graph would result in all data points being on or close to a line representative of the relationship between temperature and current. But what if an anomaly starts developing or an outlier occurs? See Figure 2.


Figure 2 – Anomalies indicate a change in behavior and warrant investigation. Outliers tend to be caused by chance (erroneous data samples, for example) and need to be noted but not necessarily acted upon.

Both temperature and current might be well within their respective limits, but outliers and anomalies are indicative of something amiss, and AI/ML is great at spotting that.

Building Blocks and Workflow

The advanced capabilities of smart vision and predictive maintenance are being embraced in factories around the world, as well as being used in a variety of other applications including security, automotive, aerospace, renewables and transportation.

As for the systems deployed, they require fundamental (electronic) building blocks in order to support AI and ML (increasingly performed “at the edge,” i.e. at the data source and where action needs to be taken). And whereas most microcontrollers and microprocessors (MCUs and MPUs, respectively) are suitable for a traditional manufacturing robot, a cobot needs something more.

For example, our SAMA5D27 MPU can be deployed to drive the robotic operations for typical factory automation scenarios such as moving, cutting, bending, pressing or joining pieces or parts together. In addition, the SAMA5D27 collects and processes sensor data, reports the status of its operation, supports directives, provides an emergency shutdown mode and monitors its environment for actionable maintenance-related information.

However, embedding ML is more than just having MCUs or MPUs capable of hosting the algorithms and models. An integrated workflow is required, one that streamlines ML model development. And with this in mind, in 2023, we launched our MPLAB Machine Learning Development Suite, a software toolkit that can be utilized across the portfolio of MCUs and MPUs. It supports not just 32-bit MCUs and MPUs—which you would normally associate with ML—but also 16- and even 8-bit devices.

Here’s how the MPLAB Machine Learning Development Suite fits into an integrated workflow:

- Data collection: Edge devices equipped with sensors collect relevant data about the equipment or system being monitored, such as temperature, vibration, pressure or any other relevant parameter. This data is imported into the MPLAB Machine Learning Development Suite as a raw or .csv file.

- Data preprocessing: In the MPLAB Machine Learning Development Suite, collected data is preprocessed to clean, normalize and transform it into a suitable format for analysis. This may involve removing outliers (as discussed above), handling missing values or scaling the data.

- Feature extraction: Relevant features are extracted from the preprocessed data. Feature extraction techniques can include statistical analysis, time series analysis, Fourier transforms, wavelet analysis or other domain-specific methods. This is also done within the development suite.

- Model development: ML models, such as classification or anomaly detection algorithms, are developed in the suite using the extracted features. This can involve techniques such as decision trees, neural networks or ensemble methods. The suite automatically optimizes the model for memory size and accuracy depending on the MCU or MPU being targeted.

- Model training and updating: The ML model is trained using historical data covering both normal and faulty operating conditions. The aim is for the model to generalize, recognizing analogous conditions in data it has not seen before.
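The preprocessing, feature-extraction and model-development stages above are performed inside the MPLAB Machine Learning Development Suite; the sketch below is not the suite's API or its generated code, only a generic, standard-library Python illustration of what those stages do. It assumes a CSV file with a hypothetical `vibration` column, skips missing readings, splits the signal into fixed-size windows, extracts RMS, peak-to-peak amplitude and the dominant DFT bin from each window, and classifies windows with a nearest-centroid model on z-scored features. Nearest-centroid is a deliberately simple stand-in for the decision trees, neural networks or ensemble methods mentioned above.

```python
import cmath
import csv
import io
import math
import statistics
from typing import Dict, List, Sequence


def load_vibration(csv_text: str) -> List[float]:
    """Read the (hypothetical) 'vibration' column, skipping missing values."""
    values = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        cell = (row.get("vibration") or "").strip()
        if cell:  # drop missing readings rather than guess a value
            values.append(float(cell))
    return values


def windows(samples: Sequence[float], size: int) -> List[List[float]]:
    """Split samples into non-overlapping windows, dropping any partial tail."""
    return [list(samples[i:i + size]) for i in range(0, len(samples) - size + 1, size)]


def dominant_bin(window: Sequence[float]) -> int:
    """Index of the strongest non-DC bin of a naive DFT (O(n^2), fine for short windows)."""
    n = len(window)
    mags = [
        abs(sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(window)))
        for k in range(1, n // 2 + 1)
    ]
    return 1 + mags.index(max(mags))


def features(window: Sequence[float]) -> List[float]:
    """Statistical (RMS, peak-to-peak) and spectral (dominant bin) features."""
    rms = math.sqrt(sum(x * x for x in window) / len(window))
    return [rms, max(window) - min(window), float(dominant_bin(window))]


class NearestCentroid:
    """Tiny classifier: z-score each feature, then pick the closest class mean."""

    def fit(self, X: List[List[float]], y: List[str]) -> "NearestCentroid":
        cols = list(zip(*X))
        self.mu = [statistics.fmean(c) for c in cols]
        self.sd = [statistics.pstdev(c) or 1.0 for c in cols]  # avoid divide-by-zero
        Z = [self._scale(x) for x in X]
        self.centroids: Dict[str, List[float]] = {}
        for label in set(y):
            rows = [z for z, lab in zip(Z, y) if lab == label]
            self.centroids[label] = [statistics.fmean(c) for c in zip(*rows)]
        return self

    def _scale(self, x: Sequence[float]) -> List[float]:
        return [(v - m) / s for v, m, s in zip(x, self.mu, self.sd)]

    def predict(self, x: Sequence[float]) -> str:
        z = self._scale(x)
        return min(self.centroids, key=lambda lab: math.dist(z, self.centroids[lab]))
```

Trained on a few windows labeled `normal` and `faulty`, `NearestCentroid().fit(X, y).predict(features(window))` returns the label of the closest class. Z-scoring matters here because RMS, peak-to-peak amplitude and a frequency-bin index have very different scales; without it, the largest-valued feature would dominate the distance.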

The next three stages of the ML workflow do not involve MPLAB, but are worthy of discussion:

- Model deployment: The trained model is deployed on the MCU or MPU used in the edge device, allowing it to make real-time predictions or detect anomalies locally without relying on a centralized server. This enables faster response times and reduces dependence on network connectivity.

- Alert generation and decision making: Based on the predictions or detected anomalies from the deployed model, the model can generate alerts or notifications on the edge device. This allows for quick response and decision making, such as scheduling maintenance activities or taking corrective actions to prevent equipment failure.

- Continuous monitoring and optimization: The edge device continues to monitor the equipment or system in real time and collects new data. If the new data drifts away from the baseline the model was trained on, the ML model can be retrained and updated. This iterative process helps to continuously improve the accuracy and effectiveness of the predictive maintenance system.
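The three on-device stages can be sketched as a single update loop. On a real MCU this logic would typically be written in C and driven by the deployed model; the Python below is only a behavioral sketch, assuming the model reduces each reading to one score (for example, a vibration RMS value) with a known healthy baseline mean and standard deviation. `EdgeMonitor`, its thresholds and its window length are hypothetical, and the mean-shift test is just one simple way to decide that the data has drifted far enough from the baseline to justify retraining.

```python
import statistics
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List


@dataclass
class EdgeMonitor:
    """Hypothetical on-device loop: score each reading, alert, and watch for drift."""

    baseline_mean: float
    baseline_sd: float
    alert_z: float = 4.0  # per-reading deviation (in baseline SDs) that raises an alert
    drift_z: float = 1.0  # shift of the rolling mean (in baseline SDs) that requests retraining
    window: int = 50      # number of recent readings in the rolling mean
    recent: Deque[float] = field(default_factory=deque)
    alerts: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.baseline_sd <= 0:
            raise ValueError("baseline_sd must be positive")

    def update(self, value: float) -> bool:
        """Process one reading; return True when retraining should be requested."""
        z = abs(value - self.baseline_mean) / self.baseline_sd
        if z > self.alert_z:
            self.alerts.append(f"ALERT: reading {value:.2f} is {z:.1f} SD from baseline")
        self.recent.append(value)
        if len(self.recent) > self.window:
            self.recent.popleft()
        if len(self.recent) < self.window:
            return False  # not enough history yet to judge drift
        shift = abs(statistics.fmean(self.recent) - self.baseline_mean) / self.baseline_sd
        return shift > self.drift_z
```

A single extreme reading produces an alert immediately, while a sustained, modest shift that never triggers an alert still causes `update` to return `True` once the rolling mean has moved by more than `drift_z` baseline standard deviations, which is the cue to collect new data and retrain.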

Wireless connectivity between parts of the factory and connectivity to the cloud are required in most smart vision and predictive maintenance scenarios, so let’s consider some other building blocks.

Our IEEE 802.11 b/g/n IoT network controllers deliver reliable Wi-Fi and networking capabilities and connect to any of our SAM or PIC MCUs with minimal resource requirements. They integrate the power amplifier, low-noise amplifier, switch and power management, and also provide internal Flash memory to store firmware.

Also, our SAM R30 series of products uses IEEE 802.15.4 in the sub-GHz channels of the industrial, scientific and medical (ISM) radio band, and its low-power sleep modes draw less than 1 µA.
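Sub-microampere sleep current matters because a duty-cycled sensor node spends nearly all its time asleep. The worked arithmetic below is a sketch under assumed figures, not SAM R30 datasheet values: a hypothetical node that wakes every 10 s, draws 10 mA for 5 ms while sampling and transmitting, and sleeps at 0.8 µA in between, powered by an ideal 1000 mAh battery. The average current is simply the time-weighted mean of the active and sleep currents.

```python
def average_current_ua(active_ma: float, active_ms: float, period_s: float, sleep_ua: float) -> float:
    """Time-weighted average current (µA) for a node that wakes once per period."""
    period_ms = period_s * 1000.0
    if not 0 < active_ms <= period_ms:
        raise ValueError("active time must be positive and no longer than the period")
    active_ua = active_ma * 1000.0
    # Charge drawn while awake plus charge drawn while asleep, spread over one period.
    return (active_ua * active_ms + sleep_ua * (period_ms - active_ms)) / period_ms


def battery_life_years(capacity_mah: float, avg_ua: float) -> float:
    """Ideal battery life, ignoring self-discharge, ageing, temperature and other loads."""
    hours = capacity_mah * 1000.0 / avg_ua
    return hours / (24 * 365)
```

With those assumptions the average is about 5.8 µA, or roughly 19.7 years on paper (ignoring battery self-discharge and ageing, which would shorten it in practice); raising the sleep current to 10 µA alone lifts the average to about 15 µA and cuts the estimate to roughly 7.6 years.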

Comprehensive Ecosystem

Developing a smart vision system, a smart predictive maintenance system or any other AI/ML-enabled platform requires an ecosystem that includes hardware, software, development environments, evaluation boards, development kits, reference designs and technical support.

Figure 3 – Microchip operates a formal AI/ML design partner program.

We have created such an ecosystem, which not only includes our own products, tools and support services but extends to formal partnership programs with companies specializing in areas such as predictive maintenance, human-machine interface (HMI), process control, gesture recognition and sensor data analytics.