Building the AI Server

Solutions for high-bandwidth interconnect and NVM storage.

Powering Advanced Workloads with AI Servers

Artificial intelligence (AI) is being adopted across all industry sectors, and the growing need to run AI (as well as machine learning, or ML) workloads is placing considerable demands on servers. Indeed, the AI server market was valued at $38.3 billion in 2023, and Global Market Insights estimates it will grow at a CAGR of over 18% between 2024 and 2032.

Understandably, the business models of organizations running AI/ML locally and those providing AI/ML-enabled cloud services rely on fast access to large datasets. Server speed and performance are of paramount importance.

The traditional core hardware elements of a server are one or more central processing units (CPUs, which themselves might be multicore), volatile memory (such as DRAM) for processing, non-volatile memory for data storage, networking interfaces (for access to the cloud or an intranet) and internal connectivity/switches to facilitate fast data transfer between the CPU(s), memory and the networking interfaces.

However, hosting AI/ML applications increasingly requires accelerator technologies such as graphics processing units (GPUs). Although servers have no display to drive, GPUs are used because their highly parallel architectures make them much faster than CPUs at the matrix and vector arithmetic at the heart of AI/ML. But it is not all about speed. The nature of the processing matters too, and field programmable gate arrays (FPGAs) are being used to process large volumes of data in parallel.

AI accelerator servers are optimized to handle the processing required for different types of AI workloads, but what they have in common is the need to scale: connecting multiple cards in a system and allowing many processors to work together. Use cases include natural language processing, predictive analytics (including financial modeling) and deep learning.

Switching

As mentioned, servers require some form of internal interconnect for high-speed data transfer. This tends to be Peripheral Component Interconnect Express (PCI Express, or PCIe®), a point-to-point, bidirectional serial interconnect for connecting high-speed components. Depending on the system architecture, a link can use 1, 2, 4, 8 or 16 lanes. The latest generation, PCIe 6.0, delivers a data rate of 64GT/s per lane which, across 16 lanes, provides a bandwidth of 256GB/s (both directions combined). By comparison, the first generation ran at 2.5GT/s, or 4GB/s per direction over 16 lanes. Note: PCIe 7.0, slated to arrive in 2025, will double the data rate to 128GT/s, giving a bandwidth of 512GB/s over 16 lanes.
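These bandwidth figures follow from simple arithmetic: per-lane transfer rate × lane count × line-encoding efficiency ÷ 8 bits per byte. The Python sketch below is a back-of-the-envelope calculator under stated assumptions, not a specification tool: it uses 8b/10b encoding for generations 1.0 and 2.0, 128b/130b for 3.0 to 5.0 and, as a simplifying assumption, ignores FLIT-mode overhead for 6.0 and 7.0, which is how headline figures are usually quoted. The table and function names are illustrative.

```python
# Back-of-the-envelope PCIe link bandwidth calculator (a sketch).
# Assumption: FLIT-mode overhead in PCIe 6.0/7.0 is ignored, matching
# how headline bandwidth figures are usually quoted.

# generation -> (transfer rate in GT/s per lane, line-encoding efficiency)
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),      # 8b/10b encoding
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
    6: (64.0, 1.0),        # PAM4 signaling, FLIT mode (overhead ignored)
    7: (128.0, 1.0),
}

VALID_WIDTHS = (1, 2, 4, 8, 16)


def link_bandwidth_gbytes(generation: int, lanes: int) -> float:
    """Return one-direction bandwidth in GB/s for a PCIe link."""
    if lanes not in VALID_WIDTHS:
        raise ValueError(f"unsupported link width x{lanes}")
    rate_gt, efficiency = PCIE_GENERATIONS[generation]
    # Each transfer moves one bit per lane; divide by 8 to get bytes.
    return rate_gt * lanes * efficiency / 8


if __name__ == "__main__":
    for gen in (1, 5, 6, 7):
        one_way = link_bandwidth_gbytes(gen, 16)
        print(f"PCIe {gen}.0 x16: {one_way:.1f} GB/s per direction, "
              f"{2 * one_way:.1f} GB/s combined")
```

Run as a script, it reports 4.0GB/s per direction for PCIe 1.0 x16, 128GB/s per direction (256GB/s combined) for PCIe 6.0 x16 and 512GB/s combined for PCIe 7.0 x16.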

These high transfer rates let server designers balance bandwidth requirements against simplicity (for example, by using fewer lanes for less demanding applications). AI and ML, however, typically need to take full advantage of a 16-lane link. Also, since generation 5.0, PCIe has provided additional capabilities to handle signal integrity issues such as loss and noise.
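The lane-count trade-off can be expressed as a small search: given a bandwidth target, try the standard widths from narrowest to widest and stop at the first one that is fast enough. The helper and its per-lane table below are hypothetical, computed with the same assumptions used for headline figures (128b/130b encoding for 4.0 and 5.0, FLIT overhead ignored for 6.0).

```python
from typing import Optional

# Hypothetical per-lane, one-direction bandwidth in GB/s after line
# encoding (128b/130b for 4.0/5.0; FLIT overhead ignored for 6.0).
PER_LANE_GBYTES = {
    4: 16.0 * (128 / 130) / 8,  # about 1.97 GB/s
    5: 32.0 * (128 / 130) / 8,  # about 3.94 GB/s
    6: 64.0 / 8,                # 8 GB/s
}

STANDARD_WIDTHS = (1, 2, 4, 8, 16)


def narrowest_width(generation: int, required_gbytes: float) -> Optional[int]:
    """Return the smallest standard lane count meeting the target, or None."""
    per_lane = PER_LANE_GBYTES[generation]
    for lanes in STANDARD_WIDTHS:
        if lanes * per_lane >= required_gbytes:
            return lanes
    return None  # even a x16 link is too slow at this generation


if __name__ == "__main__":
    target = 25.0  # GB/s, an arbitrary example requirement
    for gen in (4, 5, 6):
        print(f"PCIe {gen}.0 needs x{narrowest_width(gen, target)} "
              f"for {target} GB/s")
```

For a 25GB/s target the sketch picks x16 on PCIe 4.0, x8 on 5.0 and x4 on 6.0: each generation's higher per-lane rate lets the same requirement be met with fewer lanes.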

As AI/ML datasets grow in size, power consumption increases too, so low-power modes (such as L0p, introduced in PCIe 6.0) are very attractive, as is the wide availability of PCIe-compatible devices. Chipsets designed with AI/ML in mind are frequently launched, by both big industry names and smaller vendors.

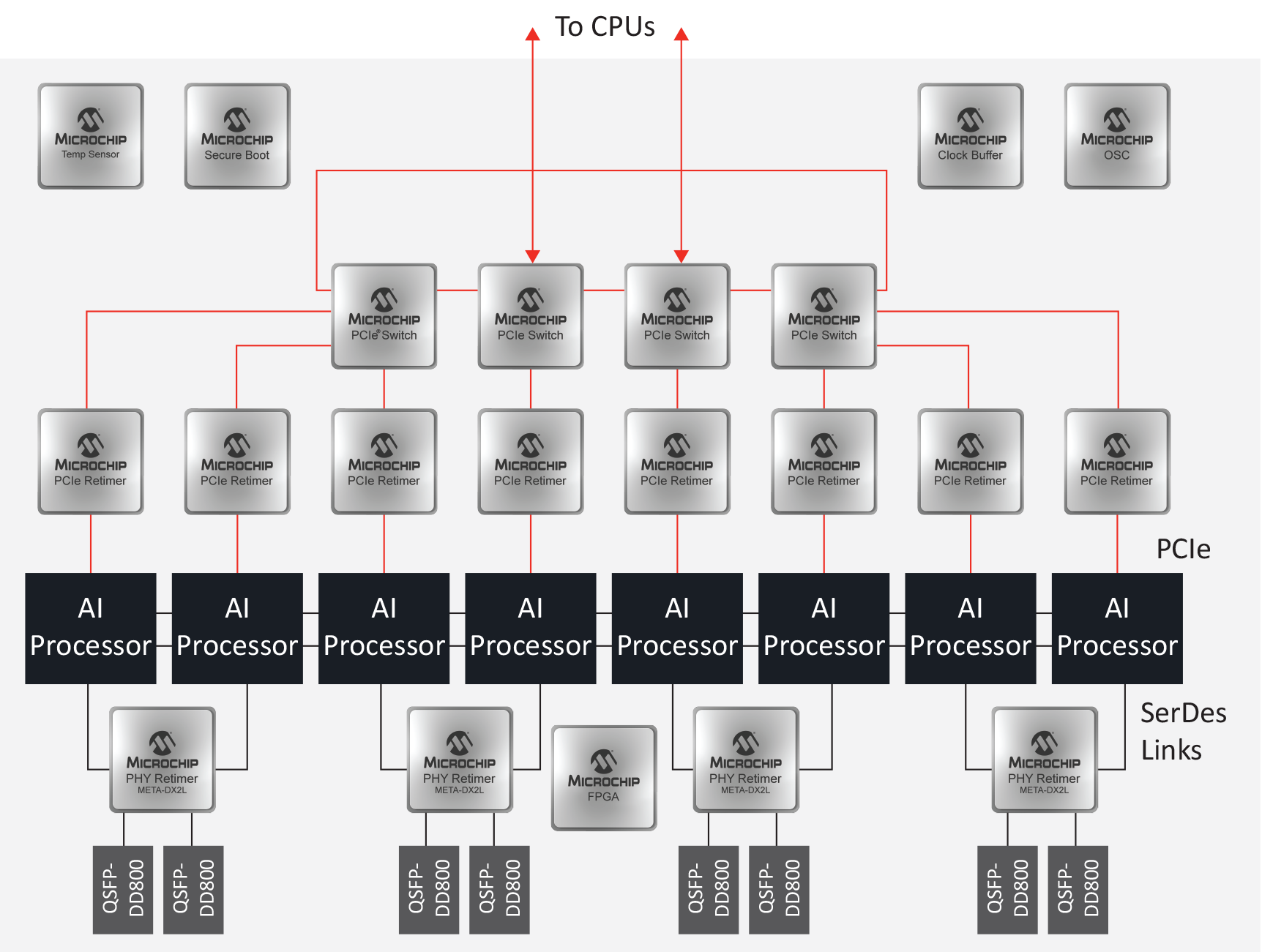

A typical AI processing/acceleration server card includes multiple AI processors (GPUs, as mentioned, but increasingly FPGAs) interconnected by a mesh or another topology. Each AI processor has a PCIe interface that connects to one or more host CPUs through PCIe retimers and PCIe switches. The AI processors also connect to (and in most cases share) PHY retimers via serializer/deserializer (SerDes) links (figure 1).


Figure 1: A typical AI processing/acceleration card block diagram
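To make the block diagram concrete, the sketch below models a hypothetical card as an undirected graph and uses breadth-first search to trace the path from an AI processor to a host CPU. The component names, the fully connected accelerator mesh, the one-retimer-per-accelerator layout, the two host CPUs and the pairing of accelerators on shared PHY retimers are all illustrative assumptions, not a description of any specific product.

```python
from collections import deque
from typing import Optional


def build_card(num_accels: int = 4) -> dict[str, set[str]]:
    """Build an adjacency map for a hypothetical AI accelerator card."""
    graph: dict[str, set[str]] = {}

    def link(a: str, b: str) -> None:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)

    accels = [f"ai{i}" for i in range(num_accels)]
    # Accelerator fabric: a fully connected mesh (assumption).
    for i, a in enumerate(accels):
        for b in accels[i + 1:]:
            link(a, b)
    # Host path: accelerator -> its own PCIe retimer -> shared PCIe switch.
    for i, a in enumerate(accels):
        link(a, f"pcie_retimer{i}")
        link(f"pcie_retimer{i}", "pcie_switch")
    # The switch fans out to two host CPUs (assumption).
    link("pcie_switch", "cpu0")
    link("pcie_switch", "cpu1")
    # Scale-out: pairs of accelerators share a PHY retimer over SerDes.
    for i, a in enumerate(accels):
        link(a, f"phy_retimer{i // 2}")
    return graph


def shortest_path(graph: dict[str, set[str]], src: str, dst: str) -> Optional[list[str]]:
    """Breadth-first search; returns the hop-by-hop path or None."""
    prev: dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            current: Optional[str] = node
            while current is not None:
                path.append(current)
                current = prev[current]
            return path[::-1]
        for nxt in sorted(graph[node]):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None


if __name__ == "__main__":
    card = build_card()
    print(" -> ".join(shortest_path(card, "ai0", "cpu0")))
```

In this model, traffic from ai0 to the host crosses its retimer and the switch, while accelerator-to-accelerator traffic stays on the mesh in a single hop, which is why the mesh and the PCIe host path are drawn as separate fabrics.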

PCIe switches come in several types, typically tailored to specific applications. For example, our Switchtec™ PFX fanout PCIe switches are used extensively in data center (and other high-density) servers and for ML applications. Features include robust error containment, hot- and surprise-plug controllers, ECC protection on RAMs, and per-port performance and error counters. Building on the capabilities of the PFX fanout devices, our Switchtec PSX devices are customer-programmable PCIe switches.

But why might customization be important?

Though servers are versatile, the industry is seeing rapid uptake of workload-specific AI accelerator hardware (such as the card in figure 1). This is driven by the growing popularity of specialized servers (customized, end-to-end solutions) for markets being dubbed micro verticals.

Data Storage

AI and ML applications need access to large amounts of data, and in recent years the Non-Volatile Memory Express (NVMe) solid-state drive (SSD) has become the de facto storage technology.

NVMe provides greater bandwidth and lower latency than the SAS and SATA interfaces, resulting in fewer server bottlenecks than SAS/SATA SSDs and hard disk drives (HDDs). Moreover, the NVMe protocol was designed from the outset for flash memory attached through the PCIe interface.
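A quick line-rate calculation shows where the bandwidth advantage comes from, and the command-queueing limits in the specifications show why NVMe handles parallel I/O so well. The sketch below is arithmetic only: it includes line-encoding overhead but not protocol overhead, so real drives deliver less than these ceilings, and the queue figures are specification maximums rather than typical driver settings. The constant and function names are illustrative.

```python
# Interface ceilings, one direction, in GB/s (encoding overhead
# included, protocol/command overhead ignored; not drive throughput).


def ceiling_gbytes(gbits_per_lane: float, efficiency: float, lanes: int = 1) -> float:
    """Line rate x encoding efficiency x lanes, converted to bytes."""
    return gbits_per_lane * efficiency * lanes / 8


INTERFACE_CEILINGS = {
    "SATA III (6Gb/s, 8b/10b)": ceiling_gbytes(6.0, 8 / 10),
    "SAS-3 (12Gb/s, 8b/10b)": ceiling_gbytes(12.0, 8 / 10),
    "NVMe on PCIe 4.0 x4": ceiling_gbytes(16.0, 128 / 130, lanes=4),
    "NVMe on PCIe 5.0 x4": ceiling_gbytes(32.0, 128 / 130, lanes=4),
}

# Specification maximums: AHCI (SATA) offers one queue of 32 command
# slots; NVMe allows up to 65,535 I/O queues of up to 65,536 entries.
AHCI_QUEUES, AHCI_QUEUE_DEPTH = 1, 32
NVME_MAX_IO_QUEUES, NVME_MAX_QUEUE_ENTRIES = 65_535, 65_536

if __name__ == "__main__":
    for name, gbytes in INTERFACE_CEILINGS.items():
        print(f"{name:28s} {gbytes:6.2f} GB/s")
    print(f"AHCI queue slots: {AHCI_QUEUES * AHCI_QUEUE_DEPTH}")
    print(f"NVMe queue entries: {NVME_MAX_IO_QUEUES * NVME_MAX_QUEUE_ENTRIES:,}")
```

A single SATA III link tops out at 0.6GB/s, whereas four PCIe 5.0 lanes give an NVMe SSD a ceiling of roughly 15.75GB/s.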

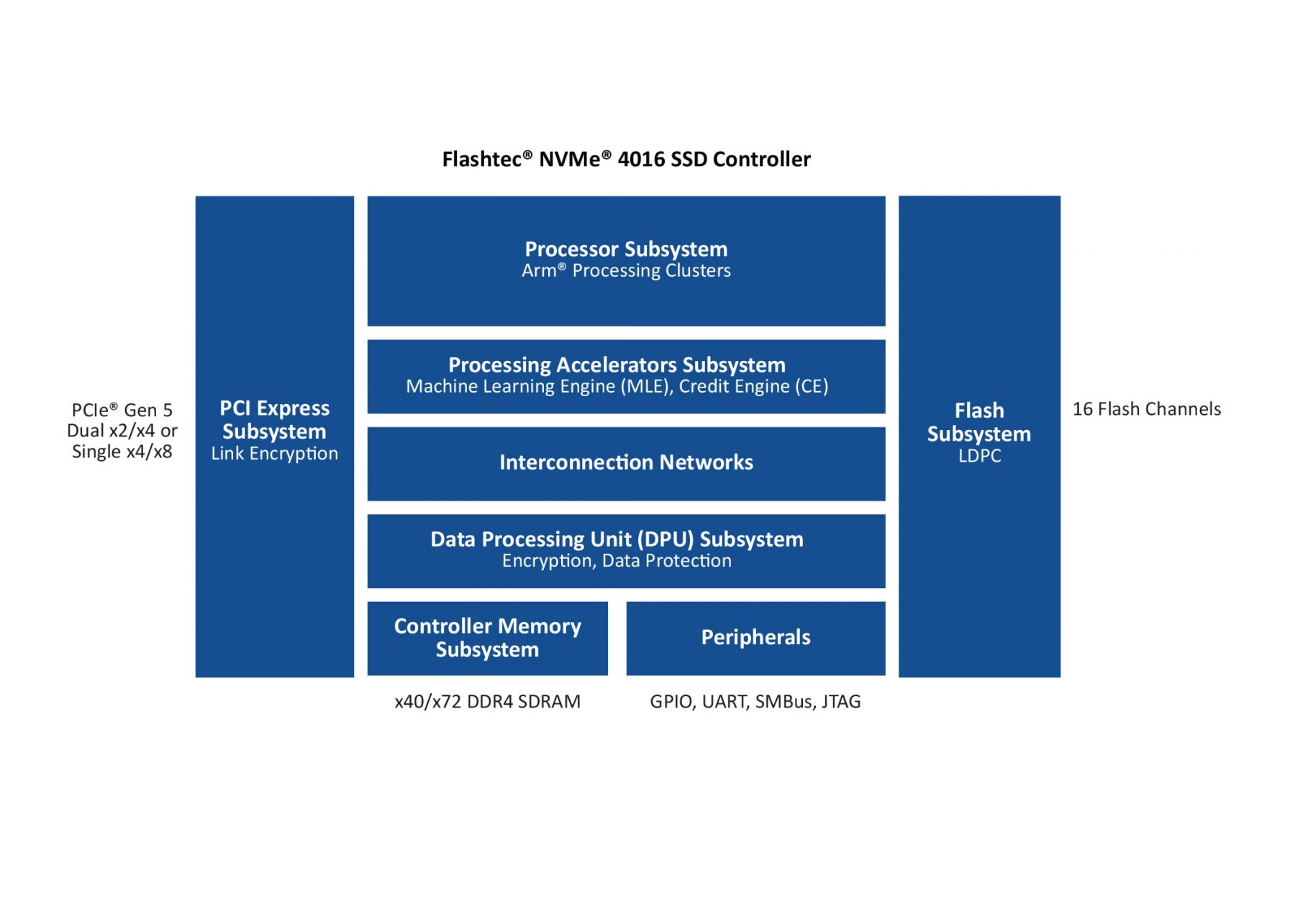

Storage control is, of course, key, and here devices such as our Flashtec NVMe 4016 16-channel PCIe 5.0 flash controller (figure 2) can be used. This programmable platform gives developers control for optimization and supports NVMe, cloud and zoned namespace (ZNS) SSDs. One of the device’s programmable elements is an ML engine that can perform data classification and pattern recognition tasks, which are essential for running AI/ML workloads.

Figure 2: The Flashtec NVMe 4016 controller is enabling SSD OEMs to develop SSDs that meet the demands of AI servers
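ZNS SSDs divide a namespace into zones that must be written sequentially, each tracked by a write pointer. The Python class below is a deliberately simplified model of that rule (a single zone, zone capacity equal to zone size, no open/active zone limits). It illustrates the concept only and is not the NVMe ZNS command set or Flashtec firmware; the class and method names are hypothetical.

```python
class Zone:
    """Toy model of one ZNS zone: sequential writes via a write pointer."""

    def __init__(self, start_lba: int, capacity_blocks: int) -> None:
        self.start_lba = start_lba
        self.capacity_blocks = capacity_blocks
        self.write_pointer = start_lba  # next LBA that may be written

    @property
    def is_full(self) -> bool:
        return self.write_pointer == self.start_lba + self.capacity_blocks

    def write(self, lba: int, num_blocks: int) -> None:
        """Regular write: must begin exactly at the write pointer."""
        if lba != self.write_pointer:
            raise ValueError(
                f"write at LBA {lba} rejected; write pointer is {self.write_pointer}"
            )
        if self.write_pointer + num_blocks > self.start_lba + self.capacity_blocks:
            raise ValueError("write would exceed zone capacity")
        self.write_pointer += num_blocks

    def append(self, num_blocks: int) -> int:
        """Zone Append style: the device picks the LBA and reports it back."""
        lba = self.write_pointer
        self.write(lba, num_blocks)
        return lba

    def reset(self) -> None:
        """Zone reset: discard contents and rewind the write pointer."""
        self.write_pointer = self.start_lba


if __name__ == "__main__":
    zone = Zone(start_lba=1000, capacity_blocks=8)
    zone.write(1000, 4)
    print("append landed at LBA", zone.append(4), "| full:", zone.is_full)
```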

NVMe SSDs are increasingly required to support predictive fault analysis and recovery mechanisms, traffic determination, performance optimization and adaptive NAND management that improves reliability. It is no surprise, therefore, that AI and ML capabilities are being built into the endpoint SSDs themselves.
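As a far simpler stand-in for the ML-based prediction described above, the sketch below fits a straight line (ordinary least squares) to periodic readings of the Percentage Used field in the NVMe SMART/Health Information log and extrapolates when it would reach 100%. The sample readings are invented for illustration, and a linear wear trend is an assumption; real drives, and the controller's ML engine, may behave very differently.

```python
from typing import Optional, Sequence, Tuple


def days_until_worn_out(samples: Sequence[Tuple[float, float]]) -> Optional[float]:
    """Estimate days after the last sample until Percentage Used hits 100.

    samples: (day, percentage_used) pairs. Returns None when there is no
    upward wear trend to extrapolate.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = sum(day for day, _ in samples) / n
    mean_y = sum(pct for _, pct in samples) / n
    sxx = sum((day - mean_x) ** 2 for day, _ in samples)
    if sxx == 0:
        raise ValueError("samples must span more than one day")
    sxy = sum((day - mean_x) * (pct - mean_y) for day, pct in samples)
    slope = sxy / sxx  # percentage points of wear per day
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    day_at_100 = (100.0 - intercept) / slope
    last_day = max(day for day, _ in samples)
    return max(0.0, day_at_100 - last_day)


if __name__ == "__main__":
    # Hypothetical monthly readings: wear grows 3 points every 30 days.
    readings = [(0, 10.0), (30, 13.0), (60, 16.0)]
    print(f"about {days_until_worn_out(readings):.0f} days of rated life left")
```

With the hypothetical readings shown, wear grows by 0.1 percentage point per day, so the sketch projects roughly 840 days of rated life remaining after the last sample.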

Other Hardware

Server power consumption has always been a concern within data centers, and with the growing demand to run AI/ML workloads, the pressure is on not only to use power-efficient devices but also to manage the power available within the architecture. Power management can be achieved through the use of power controllers and conversion ICs. However, power management can lengthen processing times and increase latency, so there is something of a trade-off.

Thermal management goes hand in hand with power management. Most servers are air cooled, but liquid cooling is gaining popularity because it removes heat more efficiently, which reduces energy costs. It also makes higher-density servers possible. Whether heat is removed by air (fans) or liquid (pumps), temperature must be monitored before it can be controlled, which is the role of temperature sensors.
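Closing the loop from temperature sensor to fan or pump can be as simple as a proportional controller: the further the measured temperature rises above a setpoint, the harder the cooling runs, clamped between a minimum duty and 100%. The setpoint, gain and duty limits below are hypothetical values chosen for illustration, not recommendations for any platform.

```python
def cooling_duty_percent(
    temp_c: float,
    setpoint_c: float = 60.0,  # hypothetical target temperature
    gain: float = 5.0,         # hypothetical % duty per degree above setpoint
    min_duty: float = 20.0,    # keep some airflow or coolant flow at all times
    max_duty: float = 100.0,
) -> float:
    """Proportional control: duty rises linearly with temperature error."""
    error = max(0.0, temp_c - setpoint_c)  # only react above the setpoint
    return min(max_duty, min_duty + gain * error)


if __name__ == "__main__":
    for reading in (55.0, 64.0, 80.0):
        print(f"{reading:.0f} C -> {cooling_duty_percent(reading):.0f}% duty")
```

With these placeholder values, 55°C gives 20% duty, 64°C gives 40% and 80°C saturates at 100%. Production controllers often add integral action or hysteresis so that fans and pumps do not oscillate around the setpoint.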

Server hardware also demands cybersecurity. Platforms must be able to evolve to counter cyberattacks during start-up, real-time operation and system updates. In this respect, we offer platform and component “root of trust” solutions to ensure cyber resiliency. These go beyond the NIST SP 800-193 Platform Firmware Resiliency (PFR) guidelines by providing runtime firmware protection that anchors the secure boot process while establishing a chain of trust for the entire system platform.
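The chain-of-trust idea can be sketched in a few lines: an immutable anchor holds the expected digest of the first boot stage, and each verified stage vouches for the digest of the next, so control only passes to code that matches. This Python sketch uses pinned SHA-256 digests for simplicity. Real secure-boot and PFR implementations typically verify cryptographic signatures instead, and the runtime firmware protection mentioned above is not modeled. The `BootStage` structure and the stage names are hypothetical.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Optional


@dataclass
class BootStage:
    name: str
    image: bytes
    next_digest: Optional[bytes]  # expected digest of the next stage, if any


def stage_digest(stage: BootStage) -> bytes:
    # Measure the code together with the digest it vouches for, so
    # tampering with either one breaks the chain.
    return hashlib.sha256(stage.image + (stage.next_digest or b"")).digest()


def build_chain(images: list[tuple[str, bytes]]) -> tuple[bytes, list[BootStage]]:
    """Link stages last-to-first; return (anchor digest, ordered stages)."""
    if not images:
        raise ValueError("need at least one boot stage")
    stages: list[BootStage] = []
    next_digest: Optional[bytes] = None
    for name, image in reversed(images):
        stage = BootStage(name, image, next_digest)
        stages.insert(0, stage)
        next_digest = stage_digest(stage)
    return next_digest, stages


def verify_chain(stages: list[BootStage], anchor_digest: bytes) -> list[str]:
    """Verify each stage against the digest vouched for by its predecessor."""
    expected: Optional[bytes] = anchor_digest  # held in immutable storage
    verified = []
    for stage in stages:
        # compare_digest performs a constant-time comparison.
        if expected is None or not hmac.compare_digest(stage_digest(stage), expected):
            raise RuntimeError(f"verification failed at {stage.name!r}")
        verified.append(stage.name)
        expected = stage.next_digest
    return verified


if __name__ == "__main__":
    anchor, chain = build_chain(
        [("rom", b"rom code"), ("bootloader", b"bl code"), ("os", b"kernel")]
    )
    print("verified:", verify_chain(chain, anchor))
```

Because each stage's measurement covers the digest it passes on, modifying any later stage (or the digest that points to it) stops the boot at the first stage that fails to match.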

Making a Difference

Innovation and differentiation are key drivers in the evolving data center market and, by extension, in the world of servers. Hardware is not only recognized as a key differentiator in AI/ML; it also accounts for almost half the value of the technology stack.

High-bandwidth interconnect through PCIe and low-latency access to data stored in NVMe SSDs are becoming the norm for all high-density servers, but many workload-specific servers require performance that is best achieved through customization, in which case the ability to program PCIe switches and NVMe controllers is ideal.

Want More?

Please visit our web page for more information on PCIe and NVMe.